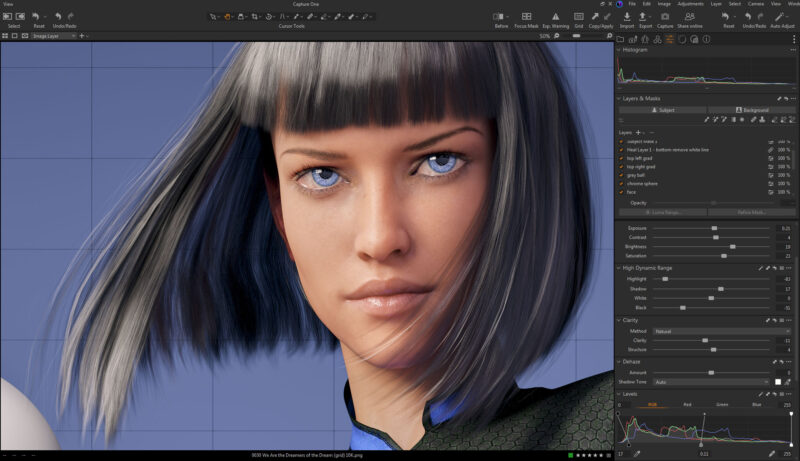

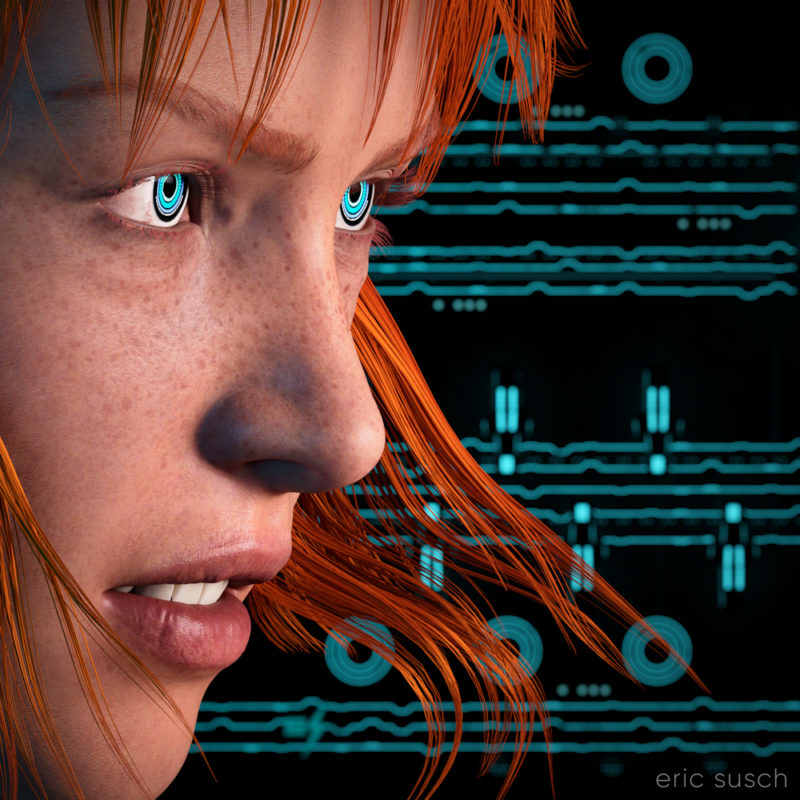

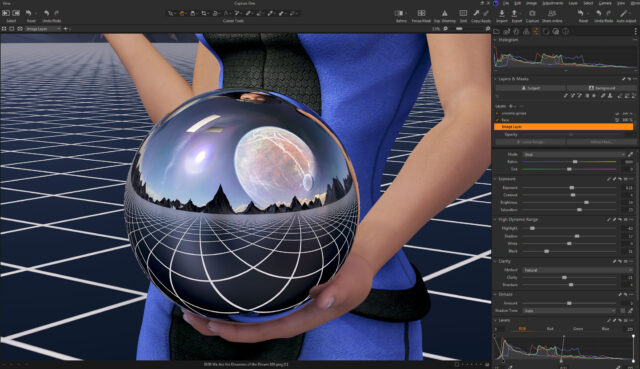

After my first experience making a puzzle, I decided to update the artwork before printing another test puzzle. I always thought the sky was a little plain in this piece, especially since the sky reflected in the chrome sphere has a planet and clouds. The plain blue at the top was also more difficult to piece together as a puzzle. To give it some detail, I decided to put a grid across the entire sky. Thematically, I think this new grid shows that the image reflected in the sphere is actually a future dream: the actual metaverse environment isn’t built yet.

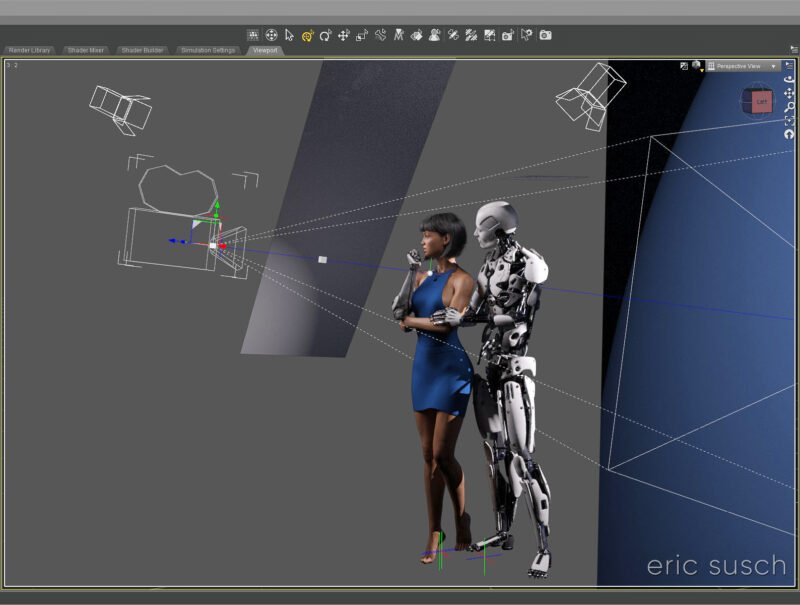

I actually went all the way back into DAZ Studio to place the grid in 3D space and re-render the entire scene from the beginning. I also took the chance to re-adjust the camera slightly to give more room around the edge of the frame for print bleed. Final color correction is also slightly different. If you’re re-doing it anyway, why not fix the things that bug you?

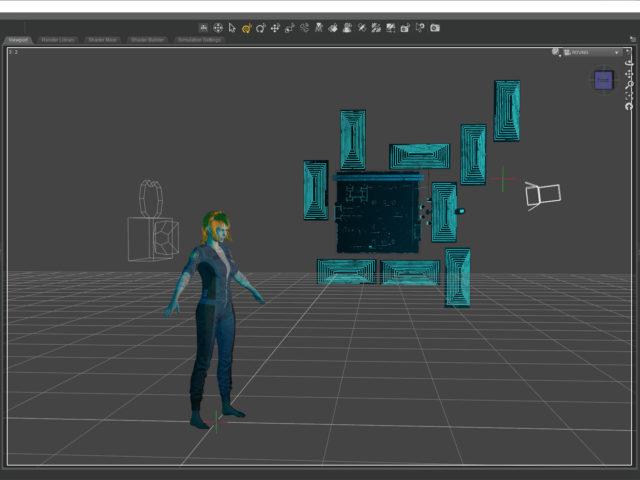

I also took the opportunity to fix another problem that I previously didn’t know how to fix and which has driven me insane since I first rendered the image. In the original you’ll notice that the left armpit of her “space samurai” outfit is screwed up.

That’s because the clothing mesh gets confused where the arm and the torso collide. I was able to grab the clothing mesh with a DAZ Studio plugin and pull it back toward the torso. I actually had to stretch it quite a ways into the center of the character, like a rubber band, to get this small area to look better.

These changes were relatively small but I think they make a big difference. Can’t wait to see this new version printed out.

Created in DAZ Studio 4.22

Rendered with Iray

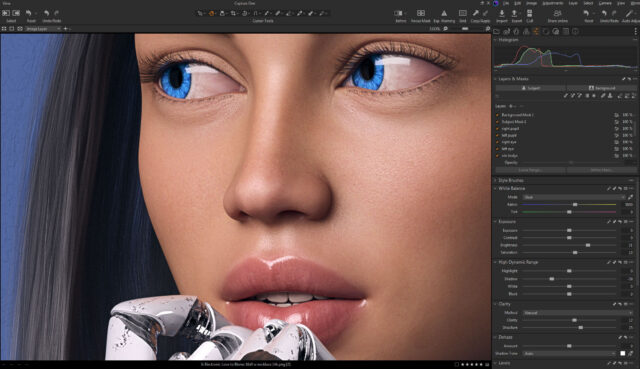

Color Correction in Capture One