This piece was a marathon to create. A perpetual artistic labor. Unending. Frustrating. We had remodeled our kitchen and saved a space on the wall for an art piece, complete with its own special spotlight. The kitchen had taken over a year to complete and this art piece had to live up to that. It needed to be perfect. Constantly second-guessing my creative choices, I took a year to finish this, sometimes setting it aside, then diving back in to see if I could perfect it. Today I’m finally calling it done and I’m presenting it here, hoping I haven’t completely strangled the emotional life out of it.

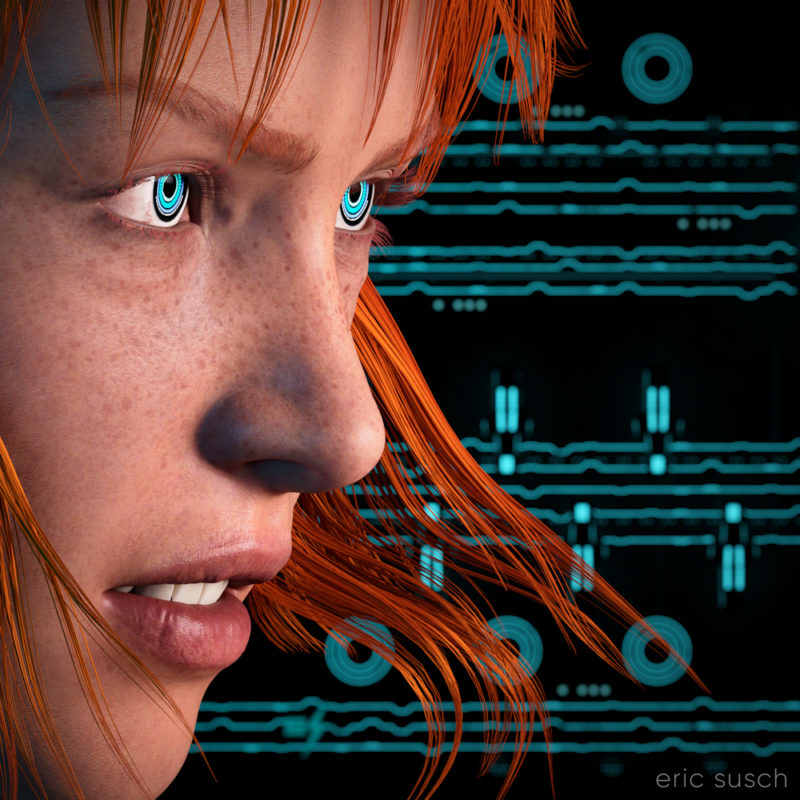

Some of the initial criteria: It was designed as a large piece, three feet square, so it needed to be extremely detailed. It had to match the modern aesthetic of our new kitchen. Colors needed to be white and gray with a blue accent. It needed to be bright, not the dark moody work I usually gravitate towards. I wanted two characters – an android and a cyborg – in love yet troubled, going through the same ups and downs we all do. …And it needed to be good. That was the most important criterion. It needed to be good.

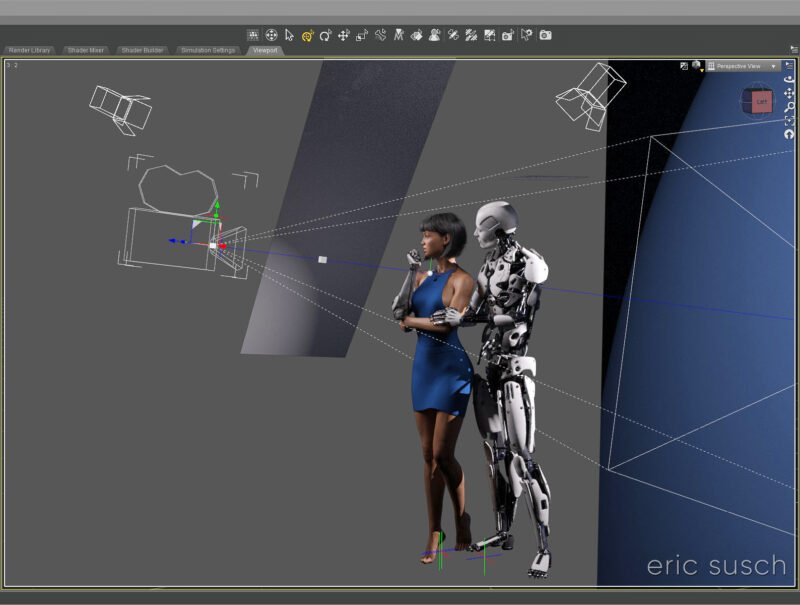

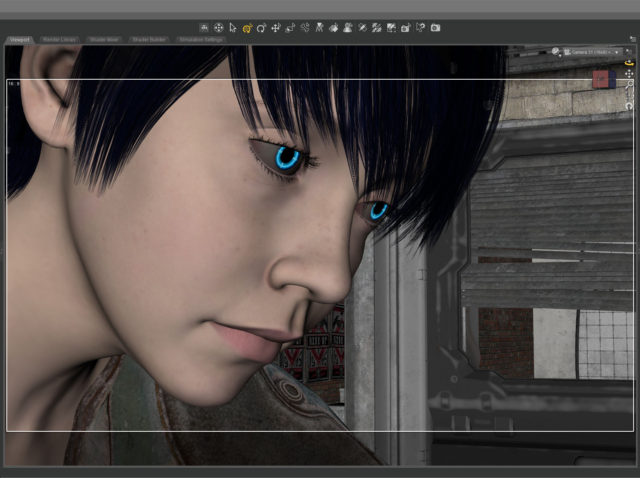

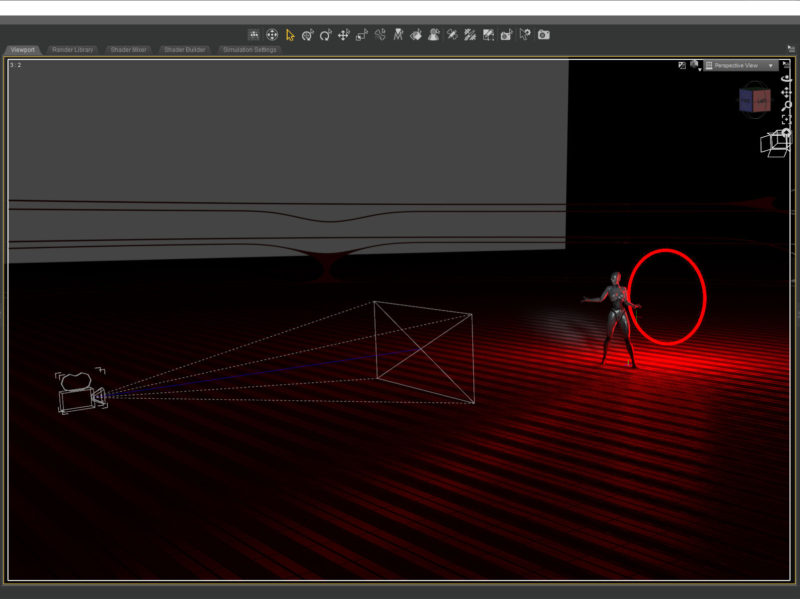

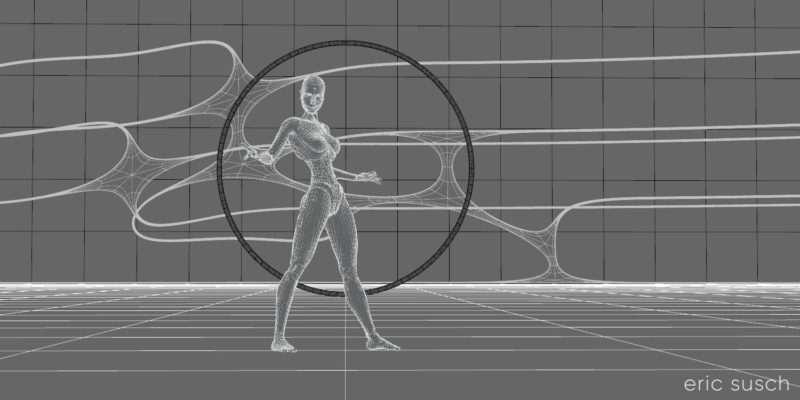

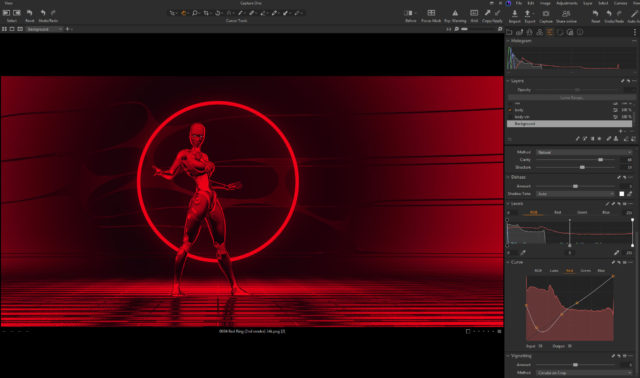

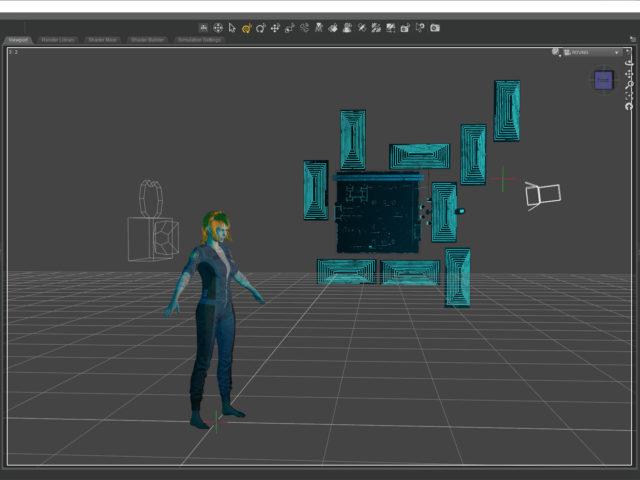

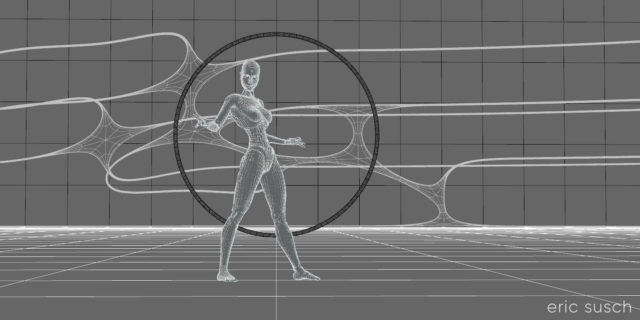

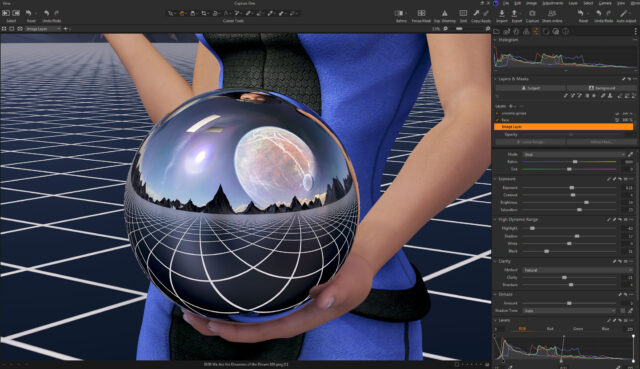

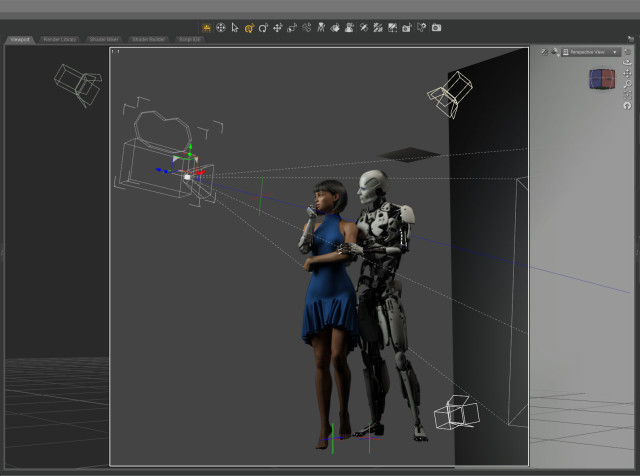

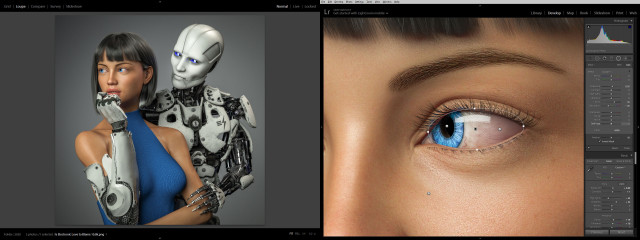

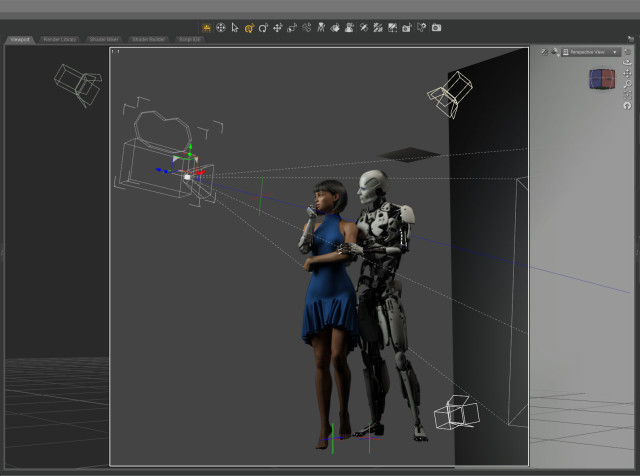

This is an in-progress test render from early on. As you can see, the original composition was wider. The plan was to have the android’s right arm on her waist, with her gently touching his metal fingers.

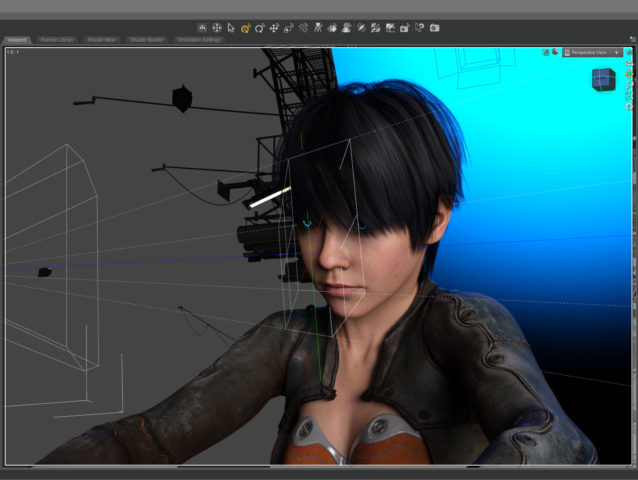

What to wear and what hair? I obsessed over endless choices.

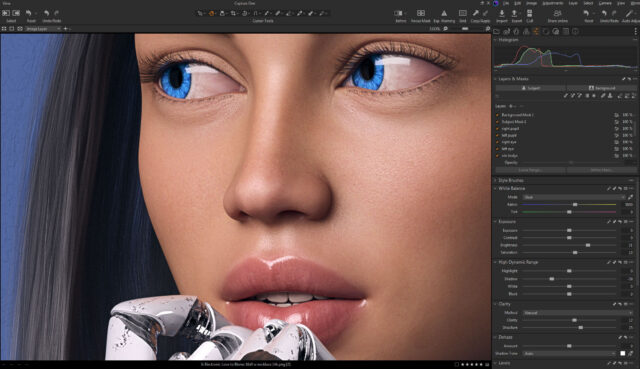

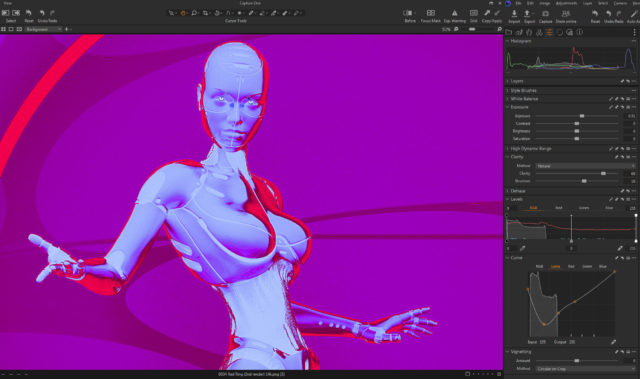

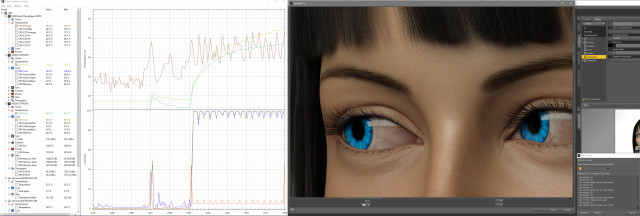

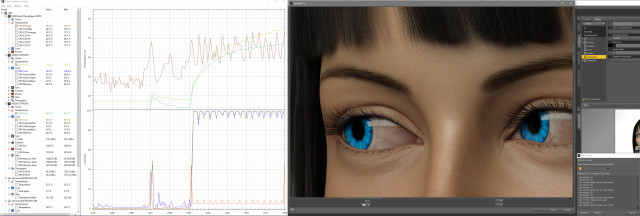

I tried many skin textures for the girl. I wanted to get the softness just right so it would contrast nicely with the hard metal of the android.

Maybe she should be an alien? Blue is the accent color so it makes sense. OK, maybe it’s too dark…

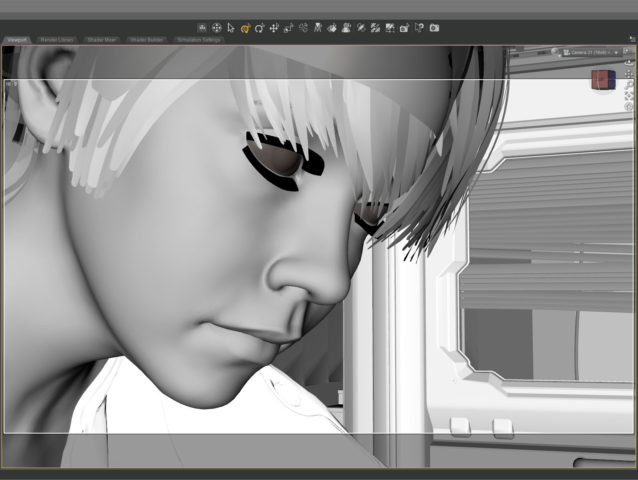

Angry robot face changed to gentle face. I needed to get some humanity in this android.

I eventually decided the girl needed bare shoulders so the cybernetic arm connection would be clearly visible. I wanted it to be clear that she was human and only her arm was mechanical. This is also the reason I decided to ditch the idea of “space girl” type clothing, which tends to be aggressive and hard. She needed to be soft, the soft spot between the hard metal of her arm and the android.

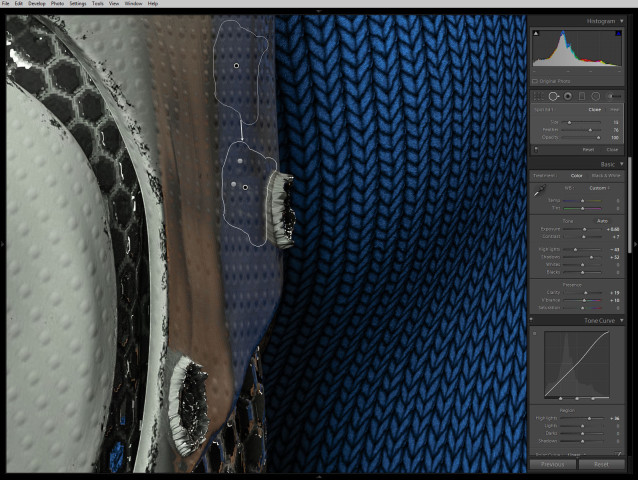

I finally decided to go with this “cold shoulder” dress. When I was working to make it blue, I changed the original cloth to a knit fabric because my wife CAT is a knitter. That just made sense to me.

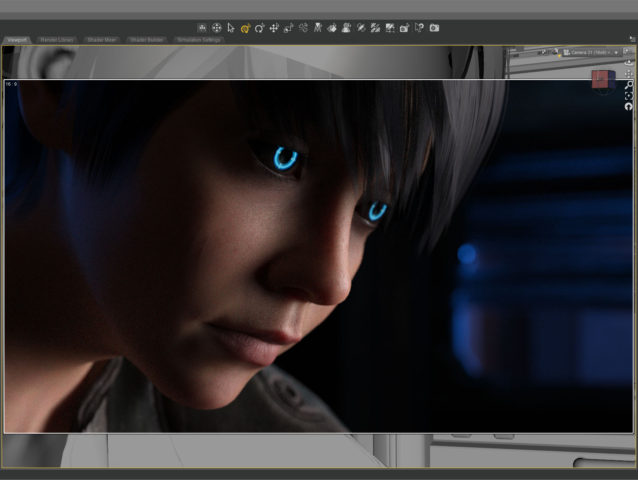

Eventually I realized that I had set the camera too far away, and moved in closer. This always happens. It’s always better after I move in. It’s just part of my process I guess.

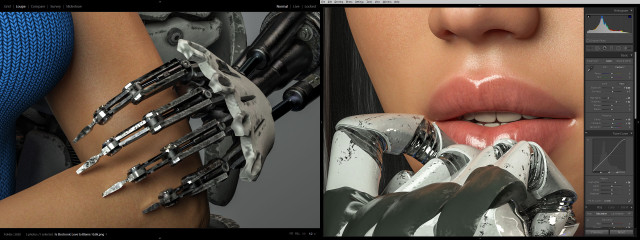

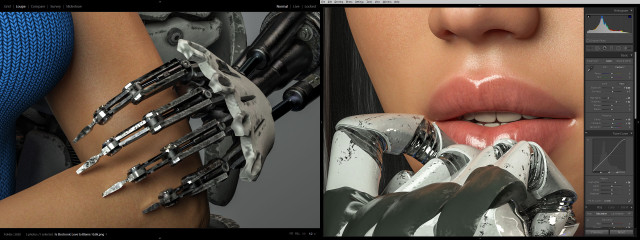

Adjusting for the new composition, I tried moving the robot’s right hand up to her shoulder. It ended up too creepy though. Trying to get the sharp metal fingers to show some sensitivity was proving difficult. It also fouled up the clean skin / machine connection I wanted for her cybernetic arm. I eventually moved the android’s right hand behind her back out of sight and concentrated on getting the left hand in the correct position. It took me three tries to get the left arm to look relaxed and gentle.

I also spent a tremendous amount of time trying to get the android fingers positioned just right so that they didn’t look like they were gouging the girl’s arm, yet still caught the light in a nice way. Skin against machine was becoming a major theme, apparently. Same with the cybernetic fingers and her lips. I actually moved the camera and lengthened the girl’s neck at one point so you could see more of her mouth.

Then, of course, I second-guessed myself and pulled the shot back to revisit the original concept of the hand around the waist. I worked on that for a while but thankfully came to my senses. Maybe I’ll revisit this wider shot if I do a different version with a vertical aspect ratio.

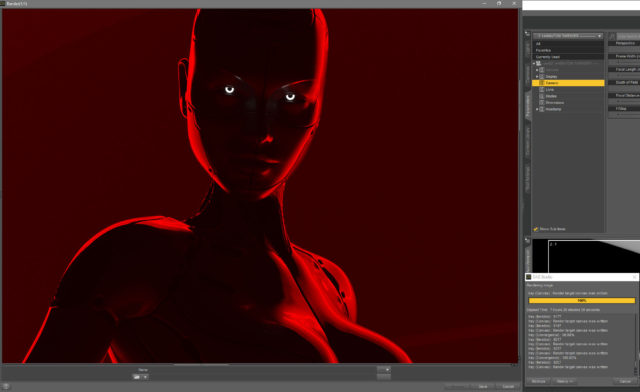

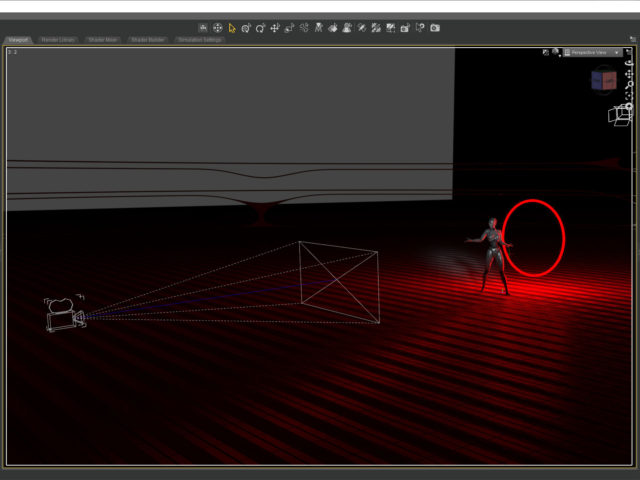

I only needed three lights to illuminate the scene. A key from the front doing most of the work. A hair light from the top that was also doubling as a fill light. And a spot on the gray background plane. I created another tiny plane just out of frame above the android to cut down the reflection on his white bald head.

The final Iray render took about two hours at 10800 x 10800 resolution. I was surprised. That’s very fast. I’ve had renders at this resolution go ten hours or more. I’m guessing the plain background and the overall brightness of the scene helped.
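
A quick sanity check on that resolution, as a rough sketch: assuming the print is exactly three feet (36 inches) on each side, 10800 pixels works out to 300 pixels per inch. The helper name `pixels_per_inch` is made up for illustration, and the 36-inch figure is an assumption based on the "three feet square" size mentioned earlier.

```python
# Hypothetical helper: pixel density of a square render printed at a given size.
def pixels_per_inch(render_px: int, print_inches: float) -> float:
    return render_px / print_inches

RENDER_PX = 10800      # edge length of the final render, in pixels
PRINT_INCHES = 3 * 12  # assumption: the print is exactly three feet per side

ppi = pixels_per_inch(RENDER_PX, PRINT_INCHES)
total_megapixels = RENDER_PX * RENDER_PX / 1_000_000

print(f"{ppi:.0f} pixels per inch, {total_megapixels:.2f} megapixels")
```

If the print ends up a different size, swapping in another value for `PRINT_INCHES` shows how the pixel density changes.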

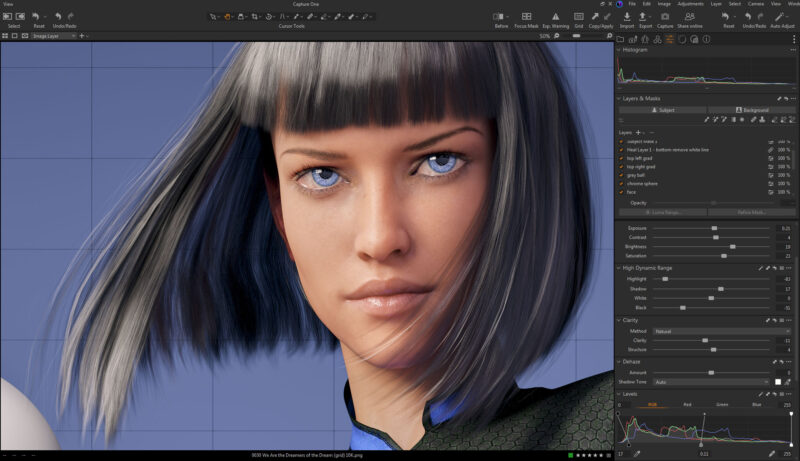

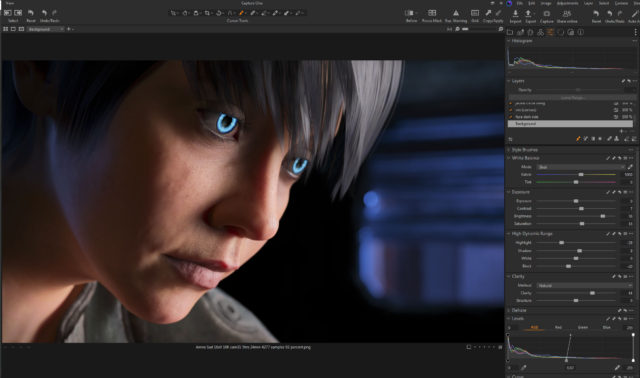

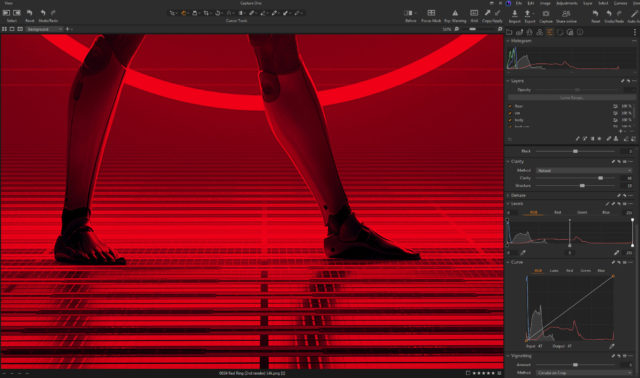

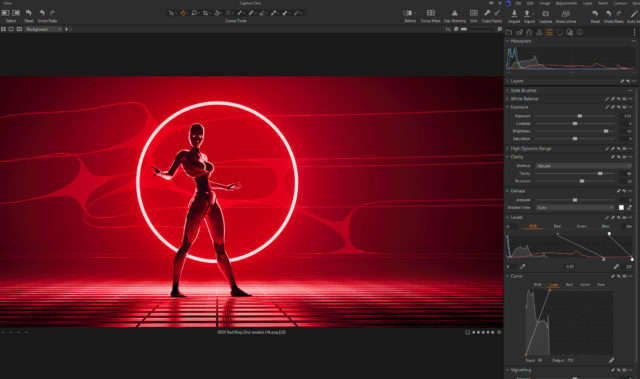

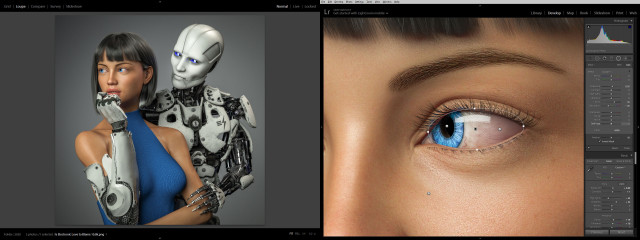

While color correcting in Lightroom, I tried to bring out the hardness of the machine and the softness of the skin.

I lightened up the girl’s eyes and obsessed over everything for quite some time. Overall I brightened everything up and made it punch as much as possible.

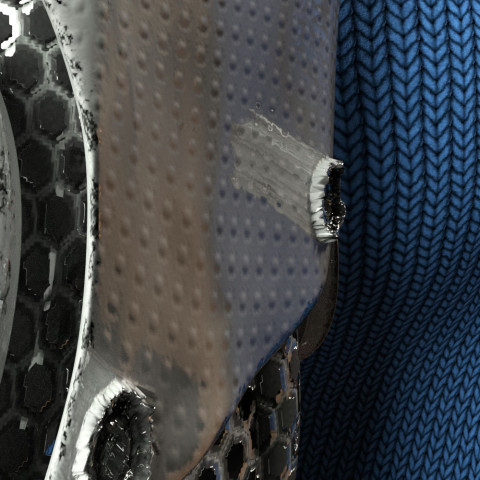

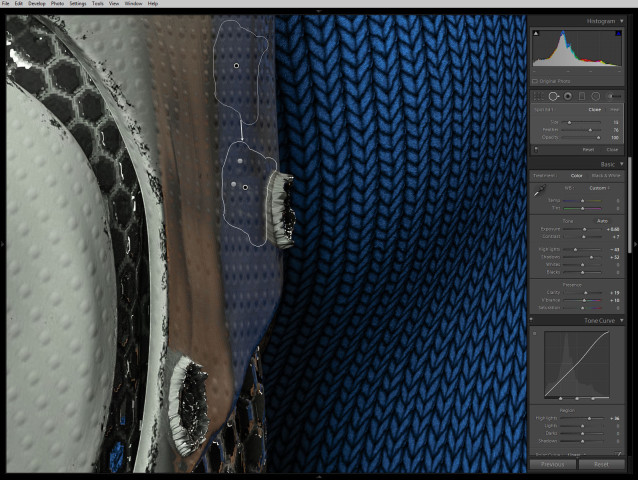

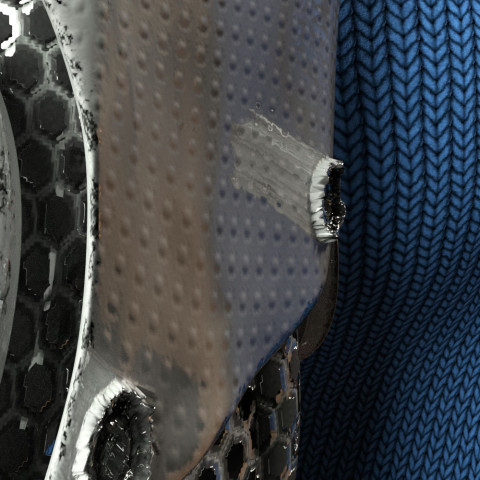

While color correcting I noticed a bizarre reflection coming off one of the poorly formed low-rez “screws” on the cybernetic arm. It had something to do with the normal map which wasn’t doing much on this surface. The screws were created with the displacement map. Not sure what was going on.

Anyway, I couldn’t figure out how to fix it in DAZ Studio without changing the character of the rest of the arm surface, so I just used the spot remover in Lightroom.

So what do you think? Did I overthink it and create something stilted? Or did I continually refine it and make it great? I can’t tell anymore.

Next step: print it and see what it looks like on the wall…

Created in DAZ Studio 4.12

Rendered with Iray

Color Correction in Lightroom

That’s because the clothing mesh is getting confused between the arm and the torso which are colliding. I was able to grab the clothing mesh with a DAZ Studio plugin and pull it back toward the torso. I actually had to stretch it quite a ways into the center of the character like a rubber band to get this small area to look better.

These changes were relatively small, but I think they make a big difference. Can’t wait to see this new version printed out.

Created in DAZ Studio 4.22