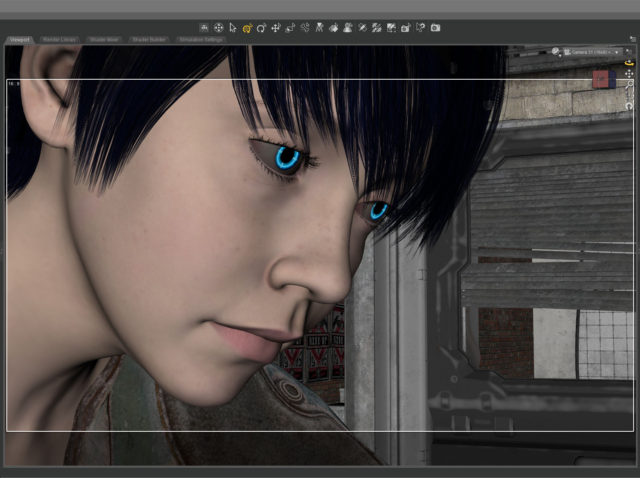

I’m still trying to make some of my CGI art look like it’s from a motion picture. What makes something look cinematic? Color? Framing? I’m still not sure. That’s what I was experimenting with in this portrait – a real person, in a real location, in a movie… A simple moment from a larger scene.

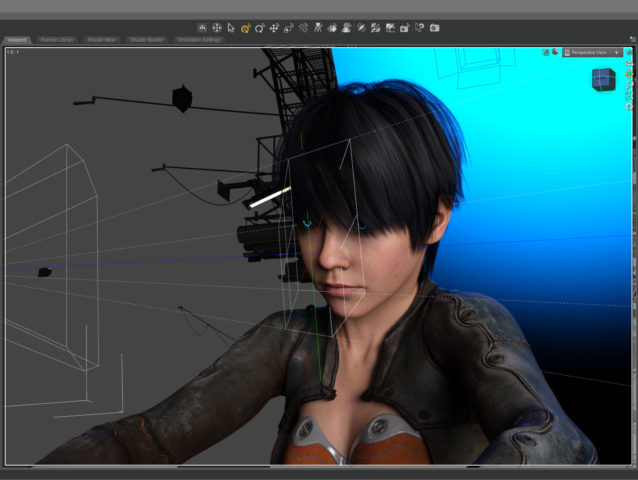

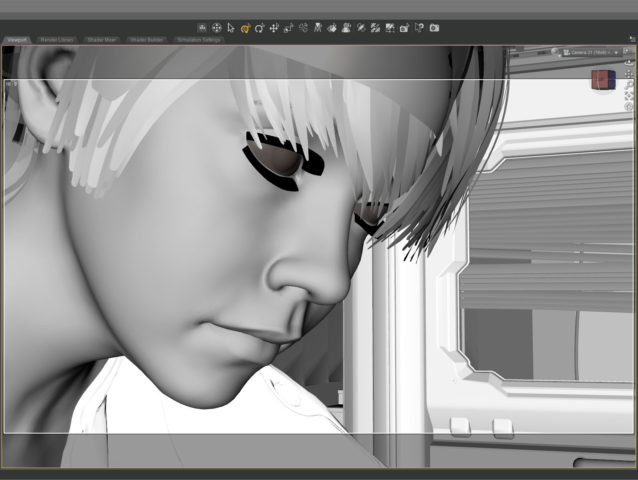

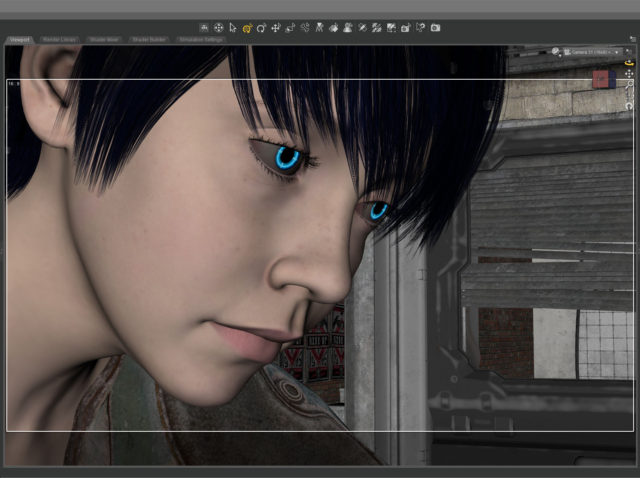

The setup was simple: face, hair, jacket, background. I set the camera lens to 100mm with a 16×9 aspect ratio and found a good closeup. I messed with the depth of field quite a bit to get the background soft but not too soft (this isn’t a DSLR movie).

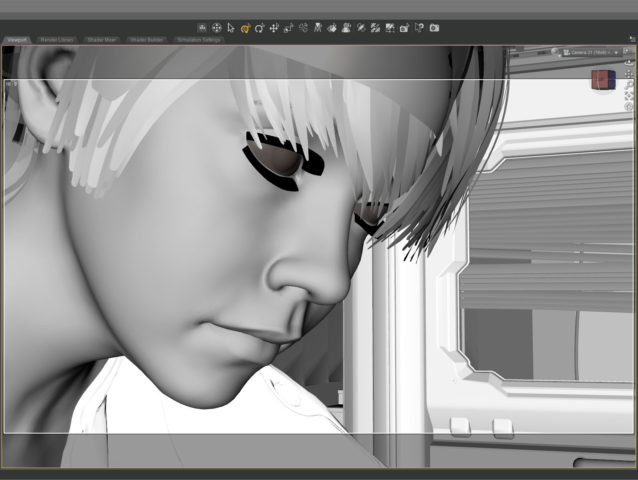

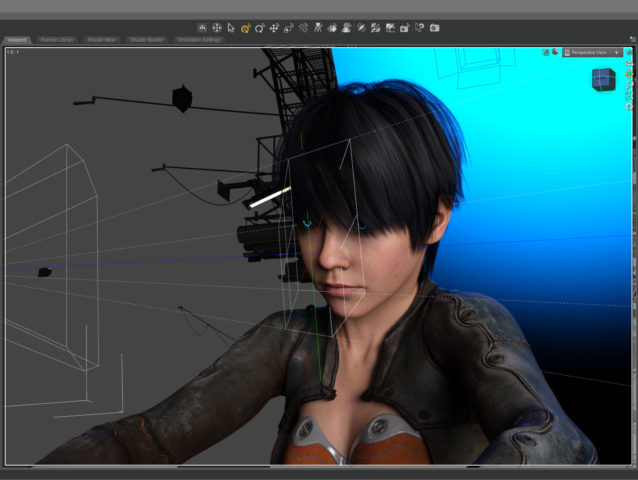

The green line in this screenshot shows how the camera (on the left) is focused precisely on the nearest eye, and the two planes show the narrow depth of field on the face. The other eye is slightly out of focus.
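
For anyone curious how narrow that slice of focus gets, here is a rough sketch using the standard thin-lens depth-of-field formulas. Only the 100mm focal length comes from this render. The f-stop, the focus distance, and the 0.03 mm circle of confusion are placeholder assumptions, and the function name is made up for illustration; this is not how DAZ Studio or Iray computes it internally.

```python
def depth_of_field(focal_mm, f_stop, focus_mm, coc_mm=0.03):
    """Return the (near, far) limits of acceptable sharpness in millimetres.

    Standard thin-lens approximation. coc_mm is the circle of confusion;
    0.03 mm is a common full-frame convention, assumed here.
    """
    hyperfocal = focal_mm ** 2 / (f_stop * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        # Focused at or beyond the hyperfocal distance: sharp out to infinity.
        return near, float("inf")
    far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far


FOCAL_MM = 100.0    # from the post
F_STOP = 2.8        # hypothetical value, not stated in the post
FOCUS_MM = 1500.0   # hypothetical camera-to-eye distance, not stated in the post

near, far = depth_of_field(FOCAL_MM, F_STOP, FOCUS_MM)
print(f"sharp from {near:.0f} mm to {far:.0f} mm ({far - near:.0f} mm deep)")
```

With those placeholder numbers the sharp zone is only a few centimetres deep, which is why one eye can be crisp while the other already goes soft. Raising the f-stop widens the zone.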

The blue in the background is the soft blue backlight. I used only three lights: a key light on the face, the backlight, and a light in the window. (And the eyes light up too.) No fill light.

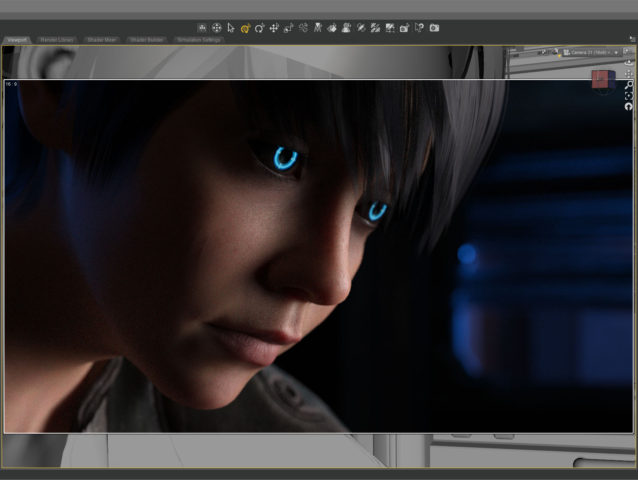

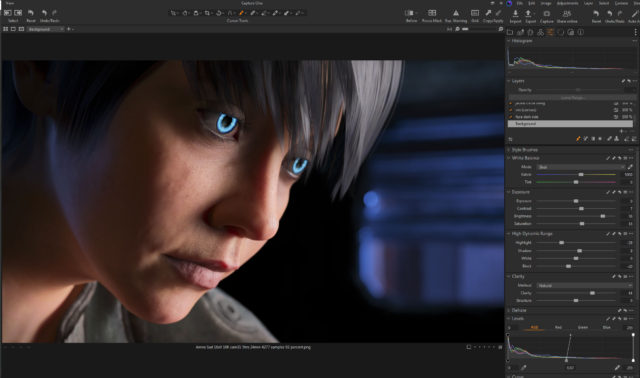

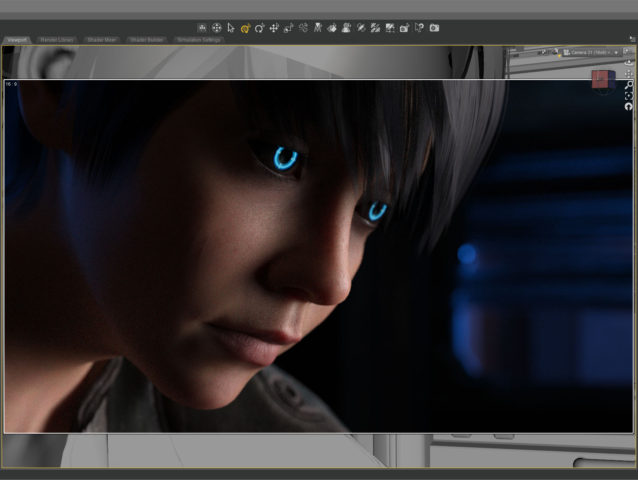

This screenshot shows how the initial render looked before color correction. It’s quite dark, which means it takes longer to render, but I liked the quality of light so I went for it. It took about five and a half hours to render the final file at 10800 x 6075. I stopped it at 4277 samples and 92 percent convergence, even though my minimum is usually 95 percent and/or 5000 samples. It didn’t look like baking it any more would make a difference.
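
The render numbers above are easy to sanity-check with a little arithmetic. This is just a sketch: the figures come from the paragraph, the helper names are invented for illustration, and reading "95 percent and/or 5000 samples" as "either one is enough" is my assumption about the rule.

```python
from fractions import Fraction

WIDTH, HEIGHT = 10800, 6075         # final render size from the post
SAMPLES, CONVERGENCE = 4277, 0.92   # where the render was stopped
RENDER_HOURS = 5.5                  # "about five and a half hours"


def aspect_ratio(width, height):
    """Reduce width:height to its simplest fraction."""
    return Fraction(width, height)


def megapixels(width, height):
    """Total pixel count in millions."""
    return width * height / 1_000_000


def seconds_per_sample(hours, samples):
    """Average wall-clock seconds spent on each sample pass."""
    return hours * 3600 / samples


def meets_usual_minimum(samples, convergence,
                        min_samples=5000, min_convergence=0.95):
    """Assumed reading of the rule: either threshold counts as done."""
    return samples >= min_samples or convergence >= min_convergence


print(aspect_ratio(WIDTH, HEIGHT))                         # 16/9
print(f"{megapixels(WIDTH, HEIGHT):.2f} MP")
print(f"{seconds_per_sample(RENDER_HOURS, SAMPLES):.1f} s per sample")
print(meets_usual_minimum(SAMPLES, CONVERGENCE))           # False
```

So the file really is 16×9, and the render stopped short of both usual thresholds, which matches calling it early by eye.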

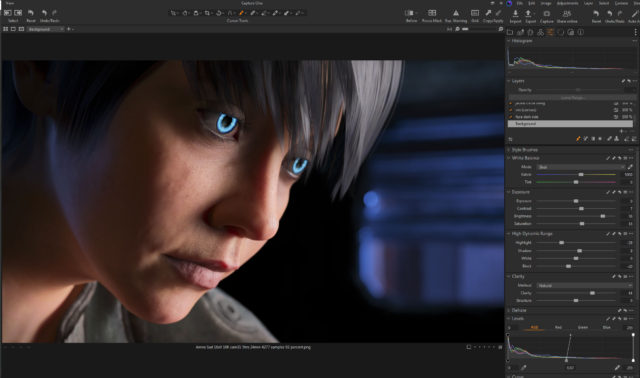

The whites of the eyes ended up quite dark in the render, so I brightened them up in post. The eyes are a really old product and I don’t think I updated the reflectivity on the sclera quite right to render properly in Iray.

I also pulled the background completely black because I thought the muddy dark shapes distracted from the face.

This is the part of the post where I feel I really should evaluate the final result… then I decide not to say anything because I can only see the mistakes. After a few months of not looking at it, I’m sure I’ll be able to figure out if I love it or hate it, but not now…

Created in DAZ Studio 4.21

Rendered with Iray

Color Correction in Capture One

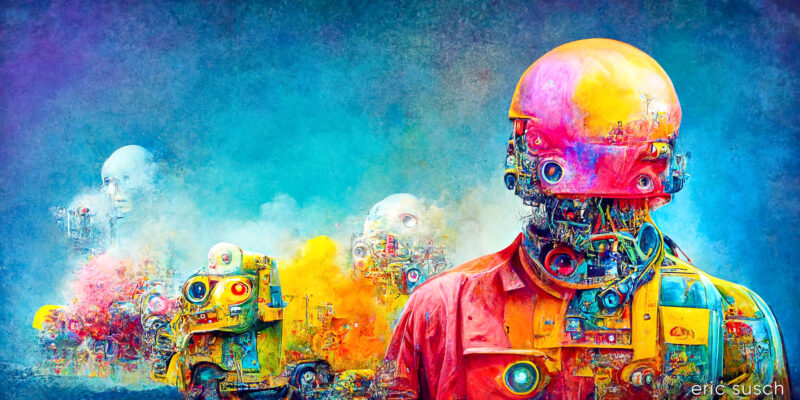

I was looking back at all the images I rendered with Midjourney over the last year and a half. There’s some good stuff in there that got overlooked. I actually rendered this last year (2022) with Midjourney version 3.
