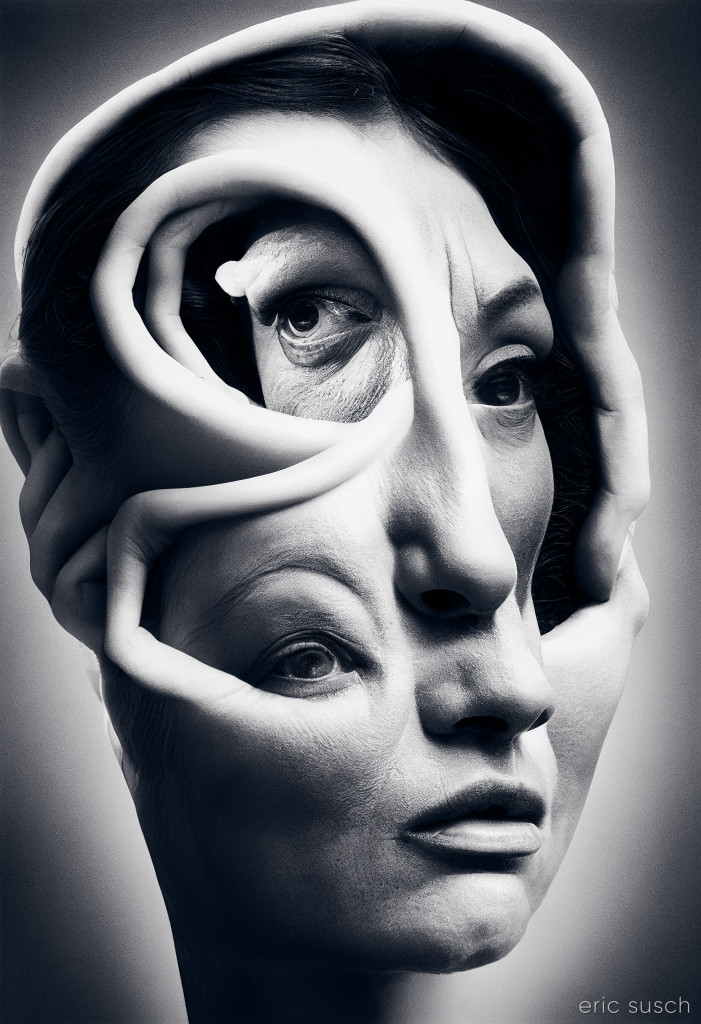

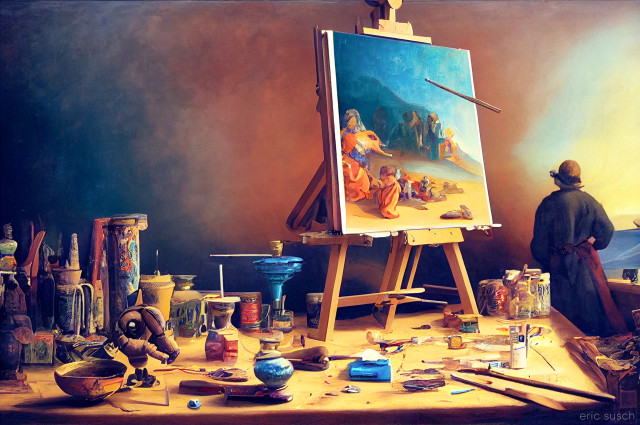

#Art I made with #Midjourney #AI

Author Archives: eric susch

Waiting for instructions…

Wesley Crusher riding a unicorn into battle

Last month, actor and writer Wil Wheaton, who played Wesley Crusher on Star Trek: The Next Generation, got a rare DALL-E invite and used “Wesley Crusher riding a unicorn into battle” as his first prompt, “because OBVIOUSLY.” You can see what he got here on his Tumblr.

I decided to see if the Midjourney art machine was up to the same challenge. After several tries with the beta --test renderer that weren’t very good, I tried the good ol’ V3 engine and finally got this image. It almost looks like a Star Trek uniform. It almost looks like Wesley Crusher. …And he almost has legs. It’s wonky and creepy, but we like that, ‘cuz that’s the way AI art should be. A few years from now, when AI art is perfectly realistic, artists will attempt to re-create this wonky style, just like Instagram filters today try to make your digital pictures look like old-fashioned faded film prints. Because realism is nice, but interesting is better…

Midjourney “thinks” and creates a portrait of a cyborg with an electronic brain

We Are All Connected

Rainbow Sky

AI-generated images BANNED!

From Ars Technica:

Confronted with an overwhelming amount of artificial-intelligence-generated artwork flooding in, some online art communities have taken dramatic steps to ban or curb its presence on their sites, including Newgrounds, Inkblot Art, and Fur Affinity, according to Andy Baio of Waxy.org.

Baio, who has been following AI art ethics closely on his blog, first noticed the bans and reported about them on Friday. So far, major art communities DeviantArt and ArtStation have not made any AI-related policy changes, but some vocal artists on social media have complained about how much AI art they regularly see on those platforms as well.

Getty Images has also banned AI-generated artwork from its site. From The Verge:

Getty Images has banned the upload and sale of illustrations generated using AI art tools like DALL-E, Midjourney, and Stable Diffusion. It’s the latest and largest user-generated content platform to introduce such a ban, following similar decisions by sites including Newgrounds, PurplePort, and FurAffinity.

Getty Images CEO Craig Peters told The Verge that the ban was prompted by concerns about the legality of AI-generated content and a desire to protect the site’s customers.

I’m not sure banning AI art is possible. Right now, for myself, I’m experimenting just to see what this new technology will come up with. If the rendered output is less than perfect, I just leave it as-is. It’s a technology artifact meant to document the state of the art. As a result, it’s easy to spot one of my AI art pieces, especially if a character in the picture has six fingers or creepy eyes.

Other artists continue perfecting their work in Photoshop, etc. Depending on their process, the final piece may be only partially AI-generated. How are these bans going to work? How can a site tell which tools you’ve used to create a digital piece? The genie is out of the bottle. We can’t go back.

My Cyborg Friend

Today I downloaded Stable Diffusion, an open text-to-image technology from Stability.ai that can render images on your own computer. You need a heavy-duty graphics card, but it’s free to use. The technology is open, and many other start-ups are incorporating it into their systems, including Midjourney, which I have been very impressed with.

It’s early days, and some of the installation methods are technically complicated (“step one: install Python programming language…” etc.), but I found a user interface project – that

My initial impression is that it’s not as good as Midjourney: not as artistic or flexible. The resolution is lower; 1024 x 1024 is the largest available in the drop-down menu, at least in the GUI that I installed. I’ve also discovered that it has a tendency to create double-headed characters if you use any resolution over 512 x 512. Apparently the AI was trained at that resolution, and if you try something larger, it tries to fill the space by duplicating things. Like I said, it’s early days.

I’m still very impressed, though. So far I’ve only tried copying and pasting some of my Midjourney prompts, which were of course optimized for a different system. I have yet to spend time figuring out all the controls, and I’m still getting some interesting images. They render fast, within a minute or two on my system, which has NVIDIA Titan RTX graphics cards.

More to come…

Wet city street film noir “remaster”

#Art I made with #Midjourney #AI

Midjourney remaster feature

Midjourney introduced a “remaster” feature today.

We want to try letting people ‘remaster’ images from the v1/v2/v3 algorithm using the ‘coherence’ of the --test algorithm.

Here’s a comparison using one of my first successful renders with Midjourney. Top is the original, rendered with the V3 algorithm, and the bottom is the new remaster.

There’s been debate about the new “--test” and “--testp” algorithms versus the older versions. The new algorithm emphasizes “coherence” and is more realistic but less “artistic.” I think a blind rush to “realism” is a mistake because images start to look like boring cell phone images, but this new algorithm at Midjourney looks pretty damn good. I like both images here. They are quite different, but both are dramatic in their own way.
