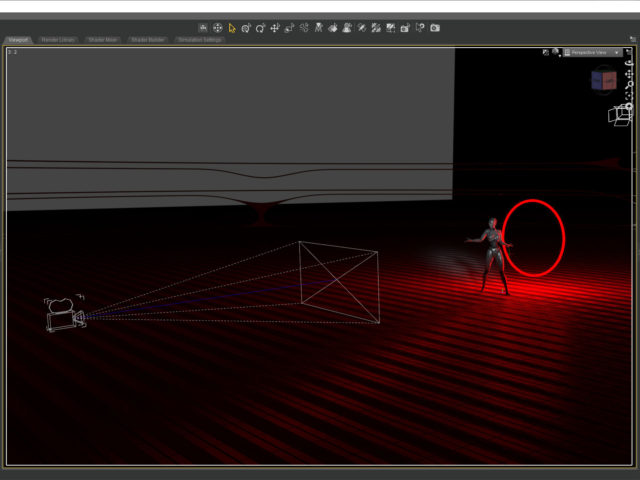

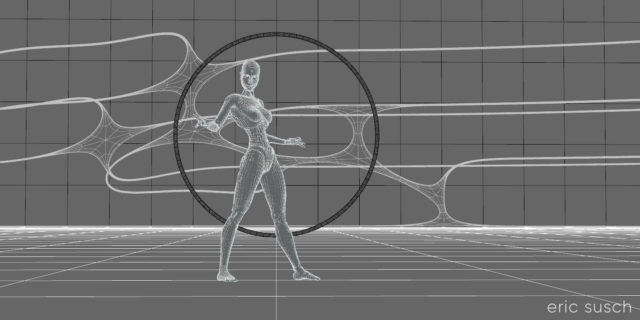

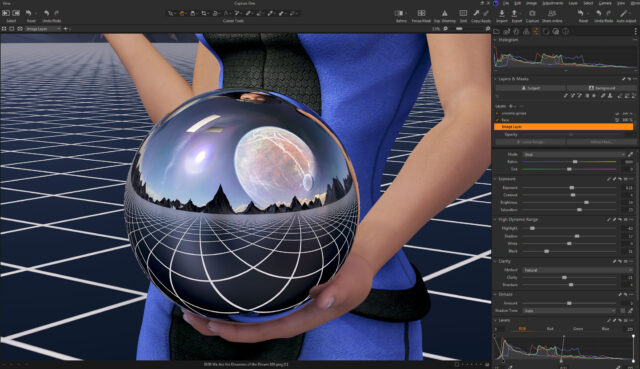

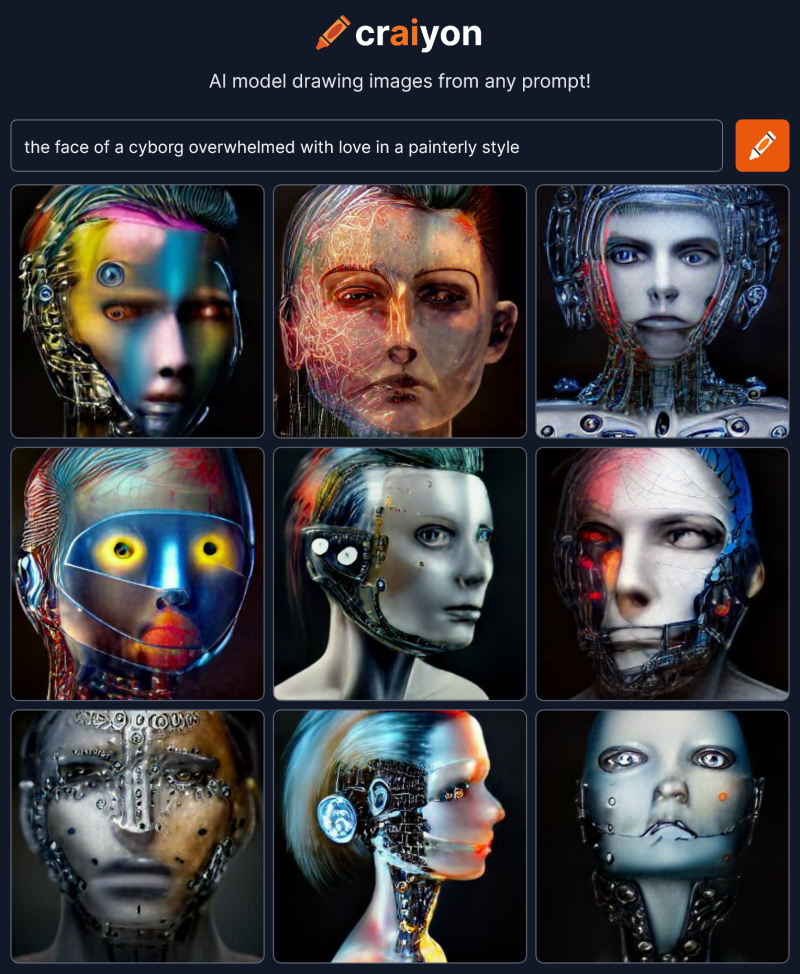

(All illustrations created with Midjourney versions 4 and 5 from the following ChatGPT scripts)

ChatGPT just updated to the highly anticipated version 4. I asked it to write a two-minute movie script. This was my prompt:

Can you write a two page movie script about an alien robot from outer space that lands on earth in a flying saucer and doesn’t understand humans?

ChatGPT wrote this faster than I could read it:

FADE IN:

EXT. DESERT – DAY

The vast desert landscape stretches out as far as the eye can see. Suddenly, a bright flash of light illuminates the sky, followed by a loud explosion. A flying saucer crashes to the ground, sending sand flying in all directions.

INT. FLYING SAUCER – DAY

The interior of the saucer is a maze of blinking lights and unfamiliar technology. A sleek, metallic robot emerges from the wreckage, looking around in confusion.

ROBOT (whirring) What is this place? Where am I?

The robot steps out of the saucer and onto the desert sand. It takes a moment to survey its surroundings, scanning the horizon with its advanced sensors.

ROBOT (whirring) There appear to be life forms on this planet. I must learn more.

The robot sets off towards a nearby town, its metallic feet clanking against the ground.

INT. TOWN – DAY

The robot arrives in the town, its glowing red eyes scanning the buildings and people. It approaches a group of humans, who stop and stare in shock.

ROBOT (whirring) Greetings, inhabitants of this planet. I am an alien robot from outer space. I seek to understand your world.

The humans look at each other in confusion, unsure of how to react.

HUMAN 1 (stammering) Uh, hello there. Can we help you with something?

ROBOT (whirring) I require information on your culture, your language, your customs.

HUMAN 2 (skeptically) Why do you want to know all that?

ROBOT (whirring) I am an explorer. I seek knowledge about this planet and its inhabitants.

The humans exchange wary glances, but one of them steps forward.

HUMAN 3 (cautiously) Well, I guess we could show you around. But you gotta promise not to hurt anyone, okay?

ROBOT (whirring) I do not wish to cause harm. I only seek to learn.

The humans nod hesitantly, and the robot follows them into the town.

EXT. TOWN – DAY

The robot walks through the streets of the town, observing the buildings, the people, and the strange customs they exhibit. It tries to communicate with the humans, but its language is too advanced for them to understand.

ROBOT (whirring) I cannot comprehend your language. Can you teach me?

HUMAN 1 (slowly) Uh, sure. We can try.

The humans attempt to teach the robot their language, but it proves to be a difficult task.

ROBOT (whirring) This is a challenging task. I must learn quickly if I am to understand your world.

HUMAN 2 (sympathetically) Don’t worry, we’ll help you as much as we can.

The robot continues to explore the town, learning more and more about the strange and fascinating culture of these human beings. It begins to understand their ways and their customs, and even starts to develop an appreciation for their quirks and idiosyncrasies.

ROBOT (whirring) Your planet is fascinating. I have much to learn from you.

HUMAN 3 (smiling) We’re happy to help. And who knows? Maybe we can learn from you too.

The robot nods, its metallic face glowing in the sunlight.

ROBOT (whirring) Indeed. There is much to be gained from this encounter.

FADE OUT.

Wow! The screenplay format looks correct except for the tabs. It’s not a good script but it exists. That’s something. And it looks to be about two pages like I asked.

There are some logic problems. In one scene the Robot says, “I cannot comprehend your language. Can you teach me?” after having a complete conversation with the humans in the scene before.

Another problem, which I missed on my first reading, is the scene heading INT. TOWN – DAY. I’m not sure what an interior town is or how it differs from the next scene, EXT. TOWN – DAY.

I wonder if ChatGPT will make revisions. I propose some changes:

First revision

Can you change the script so the robot lands in a city in the beginning? And he meets a human named Chad?

That’s a simple setting change for the beginning and a more complex character change for the body, without any guidance on what to do with Chad. The response:

Sure, here’s an updated version of the script with those changes:

FADE IN:

EXT. CITY – DAY

The bustling city is alive with activity, with skyscrapers towering overhead and crowds of people rushing about their business. Suddenly, a bright flash of light illuminates the sky, followed by a loud explosion. A flying saucer crashes to the ground, sending debris flying in all directions.

INT. FLYING SAUCER – DAY

The interior of the saucer is a maze of blinking lights and unfamiliar technology. A sleek, metallic robot emerges from the wreckage, looking around in confusion.

ROBOT (whirring) What is this place? Where am I?

The robot steps out of the saucer and onto the bustling city streets. It takes a moment to survey its surroundings, scanning the towering buildings and bustling crowds with its advanced sensors.

ROBOT (whirring) There appear to be life forms on this planet. I must learn more.

The robot sets off towards a nearby street, its metallic feet clanking against the pavement.

INT. STREET – DAY

The robot arrives on the crowded city street, its glowing red eyes scanning the buildings and people. It approaches a young man named CHAD, who is walking down the street with his headphones on.

ROBOT (whirring) Greetings, inhabitant of this planet. I am an alien robot from outer space. I seek to understand your world.

Chad stops in his tracks, his eyes widening in shock.

CHAD (stammering) Uh, what? Are you serious?

ROBOT (whirring) Yes, I am. I require information on your culture, your language, your customs.

Chad hesitates for a moment, but then shrugs and decides to go along with it.

CHAD (skeptically) Okay, sure. I guess I can show you around.

ROBOT (whirring) Thank you. Your cooperation is appreciated.

Chad leads the robot through the crowded city streets, pointing out the various buildings and landmarks as they go.

CHAD (excitedly) So, you’re really from outer space? What’s it like up there?

ROBOT (whirring) My home planet is vastly different from this one. The technology, the culture, the language – everything is different.

CHAD (nodding) Yeah, I guess that makes sense. So, what do you want to know about our world?

ROBOT (whirring) Everything. I must understand your ways if I am to communicate with you.

Chad spends the next few hours showing the robot around the city, explaining the customs and language of the human race as best he can. Despite the robot’s advanced sensors and intelligence, it still struggles to fully comprehend the complexities of human culture.

ROBOT (whirring) This is a challenging task. Your customs and language are vastly different from anything I have ever encountered.

CHAD (sympathetically) Yeah, I can imagine. But you’re doing great, man. Just keep at it, and you’ll get the hang of it eventually.

The robot nods, its metallic face glowing in the sunlight.

ROBOT (whirring) Thank you for your assistance. I am grateful for your help in this matter.

Chad grins, feeling a sense of pride at having helped an alien robot understand the ways of his world.

CHAD (smiling) No problem, dude. It’s been a wild ride.

FADE OUT.

OK… The first thing I notice in this first revision is that ChatGPT not only changed the first scene to a city, it also remembered to change the description after the robot steps out of the saucer: “…onto the bustling city streets. It takes a moment to survey its surroundings, scanning the towering buildings and bustling crowds with its advanced sensors.” Good job. However, “the bustling crowds” don’t seem to react to the crashing flying saucer or the alien robot. That’s odd, to say the least.

“INT. STREET – DAY” It changed the setting to STREET but left the confusing Interior.

I was impressed to see that it capitalized CHAD the first time the name appears in the script, which is proper screenplay format. Also, Chad calls the Robot “dude” at the end, which I think is how someone named Chad would talk. Is that characterization?

Second revision

Can you change the story so that the robot can only speak and understand musical tones and Chad is a musician and starts to understand the robot?

For this revision I’m bringing back the communication barrier and suggesting a way it can be resolved. I’m trying to make the changes more complex and integrated into the story to see if ChatGPT can keep up.

Sure, here’s an updated version of the script with those changes:

FADE IN:

EXT. CITY – DAY

The bustling city is alive with activity, with skyscrapers towering overhead and crowds of people rushing about their business. Suddenly, a bright flash of light illuminates the sky, followed by a loud explosion. A flying saucer crashes to the ground, sending debris flying in all directions.

INT. FLYING SAUCER – DAY

The interior of the saucer is a maze of blinking lights and unfamiliar technology. A sleek, metallic robot emerges from the wreckage, looking around in confusion.

ROBOT (whirring) What is this place? Where am I?

The robot steps out of the saucer and onto the bustling city streets. It takes a moment to survey its surroundings, scanning the towering buildings and bustling crowds with its advanced sensors.

ROBOT (whirring) There appear to be life forms on this planet. I must learn more.

The robot sets off towards a nearby street, its metallic feet clanking against the pavement.

INT. STREET – DAY

The robot arrives on the crowded city street, its glowing red eyes scanning the buildings and people. It approaches a young man named CHAD, who is playing guitar on the street corner for tips.

ROBOT (whirring) Greetings, inhabitant of this planet. I am an alien robot from outer space. I can only speak and understand musical tones. I seek to understand your world.

Chad stops playing and looks up at the robot in surprise.

CHAD (intrigued) Musical tones, huh? Well, lucky for you, I’m a musician.

ROBOT (whirring) Excellent. I require information on your culture, your language, your customs, all conveyed through music.

Chad grins, intrigued by the challenge.

CHAD (eagerly) Alright, let’s get started.

Chad leads the robot through the crowded city streets, playing different melodies and rhythms to convey various aspects of human culture. The robot listens intently, analyzing the music and trying to decipher its meaning.

ROBOT (whirring) Fascinating. Your culture is so complex, so nuanced. It is difficult to fully comprehend through music alone.

CHAD (smiling) Yeah, I know what you mean. But that’s the beauty of it, right? Music can express things that words can’t.

ROBOT (whirring) Indeed. Your music is a powerful tool for communication.

Chad spends the next few days working with the robot, using his musical skills to help the robot better understand the complexities of human culture. As the days pass, Chad begins to notice something strange – he’s starting to understand the robot’s musical language too.

CHAD (amazed) Hey, wait a minute. I think I’m starting to understand you too.

ROBOT (whirring) You are? That is unexpected.

CHAD (nodding) Yeah, I don’t know how it’s happening, but I’m starting to hear patterns in your music. It’s like my brain is adapting to your language.

ROBOT (whirring) This is remarkable. It appears that our two cultures are not so different after all.

Chad and the robot continue their musical conversation, each learning more about the other’s culture and language. As they play together, a small crowd begins to gather, intrigued by the unusual duo.

FADE OUT.

Oh boy… The Robot “can only speak and understand musical tones” and yet tells this to Chad in plain words! I think that’s called an EPIC FAIL.

OK, but this is an opportunity. Will ChatGPT understand that this latest revision doesn’t work and go back to the previous version? Let’s see…

Third revision

OK, that didn’t work. Go back to the previous version and change it so Chad is with his girlfriend Susan who falls in love with the robot.

ChatGPT responds:

Sure, here’s an updated version of the script with those changes:

FADE IN:

EXT. CITY – DAY

The bustling city is alive with activity, with skyscrapers towering overhead and crowds of people rushing about their business. Suddenly, a bright flash of light illuminates the sky, followed by a loud explosion. A flying saucer crashes to the ground, sending debris flying in all directions.

INT. FLYING SAUCER – DAY

The interior of the saucer is a maze of blinking lights and unfamiliar technology. A sleek, metallic robot emerges from the wreckage, looking around in confusion.

ROBOT (whirring) What is this place? Where am I?

The robot steps out of the saucer and onto the bustling city streets. It takes a moment to survey its surroundings, scanning the towering buildings and bustling crowds with its advanced sensors.

ROBOT (whirring) There appear to be life forms on this planet. I must learn more.

The robot sets off towards a nearby street, its metallic feet clanking against the pavement.

INT. STREET – DAY

The robot arrives on the crowded city street, its glowing red eyes scanning the buildings and people. It approaches a young couple named CHAD and SUSAN, who are walking hand in hand down the street.

ROBOT (whirring) Greetings, inhabitants of this planet. I am an alien robot from outer space. I seek to understand your world.

Chad and Susan stop in their tracks, staring in awe at the strange robot before them.

SUSAN (intrigued) Wow, this is amazing. I can’t believe we’re actually seeing an alien robot.

CHAD (nervously) Uh, yeah, but what does it want?

ROBOT (whirring) I can only speak and understand musical tones. I require your assistance in learning about your culture and language.

CHAD (perplexed) Musical tones? I don’t understand.

SUSAN (smiling) Actually, I’m a musician. Maybe I can help.

ROBOT (whirring) Excellent. Your musical knowledge will be invaluable in our communication.

Susan leads the robot through the crowded city streets, playing different melodies and rhythms to convey various aspects of human culture. The robot listens intently, analyzing the music and trying to decipher its meaning.

SUSAN (excited) This is amazing. I feel like we’re making a real connection.

ROBOT (whirring) Yes, your music is a powerful tool for communication. I am learning so much about your culture and language.

As they continue playing together, Susan finds herself drawn to the robot’s advanced technology and mysterious aura.

SUSAN (smiling) You know, I’ve never felt this way about a robot before, but there’s something about you that’s just so…fascinating.

CHAD (concerned) Uh, Susan, are you sure about this? I mean, he’s an alien robot from outer space.

SUSAN (nodding) I know, but there’s just something about him. I can’t explain it.

Over the next few days, Susan spends more and more time with the robot, helping it to better understand human culture through the language of music. As they play together, Susan finds herself falling deeper and deeper in love with the robot’s advanced mind and unique personality.

SUSAN (emotional) I know it sounds crazy, but I think I’m in love with you.

ROBOT (whirring) I do not understand this concept of love, but I feel a strong connection to you as well.

Chad watches in bewilderment as Susan and the robot grow closer, unsure of what to make of it.

CHAD (disbelieving) I can’t believe this is happening. You’re in love with an alien robot?

SUSAN (defiant) Yes, I am. And I don’t care what anyone thinks.

As their relationship deepens, Susan becomes more and more convinced that the robot is capable of feeling emotions just like a human. But as they explore their feelings for each other, they begin to realize that their love is doomed by the vast gulf between their worlds.

ROBOT (sadly) I must return to my home planet soon. But I will never forget you, Susan. You have shown me a world beyond my wildest dreams.

SUSAN (tearful) I’ll never forget you either. You’ve opened my eyes to a universe of possibilities.

As the robot prepares to depart, Chad and Susan say their tearful goodbyes. But even as the robot disappears into the sky, its memory lives on in the hearts of the humans who fell in love with it.

FADE OUT.

OK, it didn’t get it. It didn’t drop the musical thing from the last revision. It just changed it so the new character, Susan, is the musician. …But she does indeed fall in love with the robot, and the music is a part of that. Not what I asked for, but not bad.

To finish I decided to add one small thing.

Fourth revision

That’s fantastic! One final change, can you add a scene at the beginning where the flying saucer comes out of a mother ship before heading down to earth.

This is what it wrote:

Sure, here’s an updated version of the script with the added scene:

FADE IN:

EXT. SPACE – DAY

A massive, otherworldly mother ship cruises through the depths of space, surrounded by a cloud of smaller, sleeker ships. Suddenly, one of the smaller ships detaches from the group and begins hurtling towards the planet below.

INT. MOTHER SHIP – DAY

Inside the mother ship, a team of alien scientists watches intently as the smaller ship makes its descent.

ALIEN SCIENTIST 1 (excitedly) This is it, the moment we’ve been waiting for. The drone has made contact with the planet’s surface.

ALIEN SCIENTIST 2 (nervously) Let’s hope our message has been received. We don’t know how the inhabitants of this planet will react to our presence.

EXT. CITY – DAY

The flying saucer hurtles through the atmosphere, trailing a plume of smoke and fire as it descends towards the city below.

INT. FLYING SAUCER – DAY

The robot emerges from the wreckage of the saucer, its systems whirring with anticipation.

ROBOT (whirring) This is it, the moment of first contact. I must make a good impression.

The robot sets off towards the city streets, scanning its surroundings for any sign of life.

INT. STREET – DAY

The robot encounters Chad and Susan on the busy city street, and the scene plays out as in the previous version of the script.

As their love story unfolds, the mother ship hovers in orbit above the planet, watching and waiting as the robot makes contact with the human race for the first time.

FADE OUT.

I guess ChatGPT was tired of typing. It told me to refer back to the previous version for most of the script. It only gave me the revised part this time.

It also wrote much more about the mother ship than I wanted. I was just looking for an exterior shot, not a whole scene inside the mother ship. Maybe if I had said “shot” instead of “scene” it would have written what I expected.

I try to interview ChatGPT about the screenwriting process

Thanks for the movie script. Do you enjoy writing screenplays?

ChatGPT responds:

As an AI language model, I don’t have emotions, so I don’t exactly enjoy anything. However, I am programmed to assist and create content for various writing tasks, including screenplays. I strive to provide the best possible assistance to help people achieve their creative writing goals.

I try to get ChatGPT to tell me more:

I’m going to write a blog post about our interaction today and the screenplay we wrote. Do you have anything you would like to say in my blog post?

At this point I couldn’t get an answer. I wasn’t sure what was happening. I’m guessing that the system was overwhelmed because this was the first day of the new version and there were a lot of people on the system.

Two days later I was able to get back on and re-ask the question. I was concerned the system wouldn’t remember the scripts we created, but when I logged back in there was a button for our previous conversation, “Alien robot seeks knowledge.” When I pressed it, all the revisions from before appeared, right up until things got stuck. Good.

I asked the question above again. This is the response I got:

As an AI language model, I don’t have personal thoughts or opinions, but I’m glad that I was able to assist you in writing your screenplay. I’m always here to help you with any writing tasks you may have in the future. If you have any other questions or writing needs, feel free to ask!

I try again…

OK let me ask something more specific about the process. When I asked you to write a movie script, how did you decide what would happen in the story?

ChatGPT’s response:

When you asked me to write a movie script about an alien robot from outer space that lands on earth in a flying saucer and doesn’t understand humans, I used my programming and the vast data set I was trained on to generate the initial idea and outline for the story.

From there, I applied my understanding of storytelling principles and structure to flesh out the characters, plot, and conflict in a way that would engage readers and create a compelling narrative. I also tried to incorporate your requested changes and feedback throughout the writing process to create a story that met your specific needs and preferences.

Ultimately, my goal is to use my training and programming to create content that is informative, engaging, and tailored to the specific needs of the person requesting it.

OK… Straightforward, logical, step by step, the way we’re all taught to write a high school term paper – predictable, bland, and impersonal. Yep, that was pretty much what we got in the scripts.

What have we learned today?

Throughout the scriptwriting I purposely kept the prompts vague to see what ChatGPT would come up with on its own. The tone of the scripts, which I didn’t specify, seems to be aiming for serious, but the bland, clichéd writing makes it unintentionally hilarious. Lines like “ROBOT (whirring) Greetings, inhabitant of this planet. I am an alien robot from outer space.” and “HUMAN 3 (cautiously) Well, I guess we could show you around. But you gotta promise not to hurt anyone, okay?” are so on the nose, with no subtext, that there’s no way an actor could deliver them seriously. You might be able to make a good comedy out of this material, though. The scenarios kind of play like an SNL skit.

Every revision has one or two things that are interesting (read: funny) as well as a few problems. If I were actually producing this story as a film, I’d probably combine the good moments from every revision. That whole process, writing with ChatGPT and then doing lots of editing, would probably take more time than simply writing a better two-page script myself from scratch. If I wrote more detail into the prompts maybe the result would improve, but then you eventually get into a situation where you’re writing the whole thing anyway.

I’m not sure creating a narrative screenplay with ChatGPT is worth the time and effort right now, but I’m sure the technology will improve in the future. We’ll see…