Recently I was experimenting with the Midjourney AI art engine. I saw an image in my mind of a robot backlit by a red ring-light. I typed it up as a prompt:

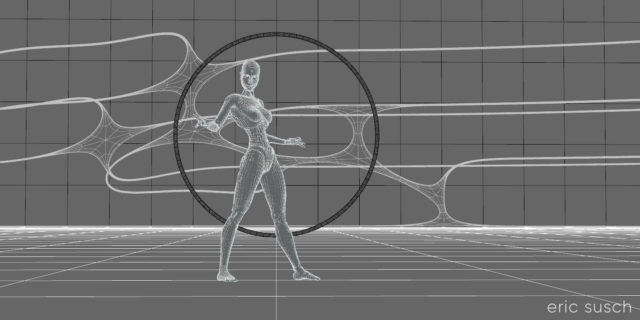

An intensely bright thin red ring light in the distance, a woman robot in silhouette, on an abstract shiny metal plate surface, hyper realistic, cinematic, dense atmosphere, intense, dramatic, hyper detailed, --ar 2:1 --v 5

I expected to get something like the image above. That’s not what happened. For the next hour I tried to get Midjourney to build something even close to what I envisioned. I typed and re-typed the prompt, changing the way I described the image. Most of the time I couldn’t even get a red light ring. Midjourney kept trying to make a “sun” with a red sky. There are round portal structures, some even reflecting red light, but almost none of them light up. The light’s coming from somewhere else.

What I asked for was simple. Why is this so hard?

I’m guessing it has to do with the data set the AI was trained on. I bet there aren’t that many images of red light rings in there, maybe none at all.

One of the things that frustrates me about AI art is the way most things turn out looking generic, like everything you've seen a million times before. This makes sense, of course, because that's how it works. It studies what everything looks like and then creates from that. It's almost creation by consensus. An unusual red ring isn't part of the equation. I could probably get to what I wanted eventually if I kept trying, perhaps with much longer prompts describing every detail. Maybe.

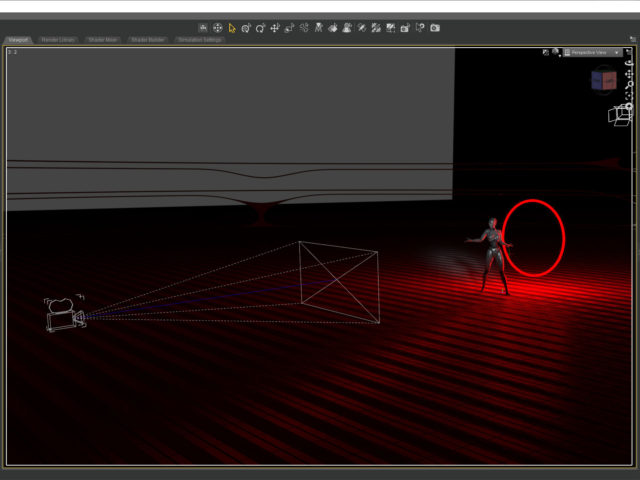

Or I could do what I actually did: create what I saw in my mind with CGI.

Has this put me off AI art? No. Every tool is good at what it's good at, and not at what it isn't. I was looking for the edge of what this new tool could do (because that's where the art is) and I found it. There's nothing really interesting right here, but there's a lot more to discover…
